AI Ethics Today

Spring 2025

James Brusseau

jamesbrusseau@gmail.com

jbrusseau@pace.edu

UniTrento webpage

Objectives

1. Through case studies and discussion, we will explore the principles of AI ethics and apply them to today's technology. We will learn to talk about the human side of AI innovation.

2. Survey the primary debates in AI ethics.

3. Students will be equipped to produce AI ethics evaluations of their own work, to respond to ethics committees, and to perform AI ethics audits / algorithmic impact statements.

Content

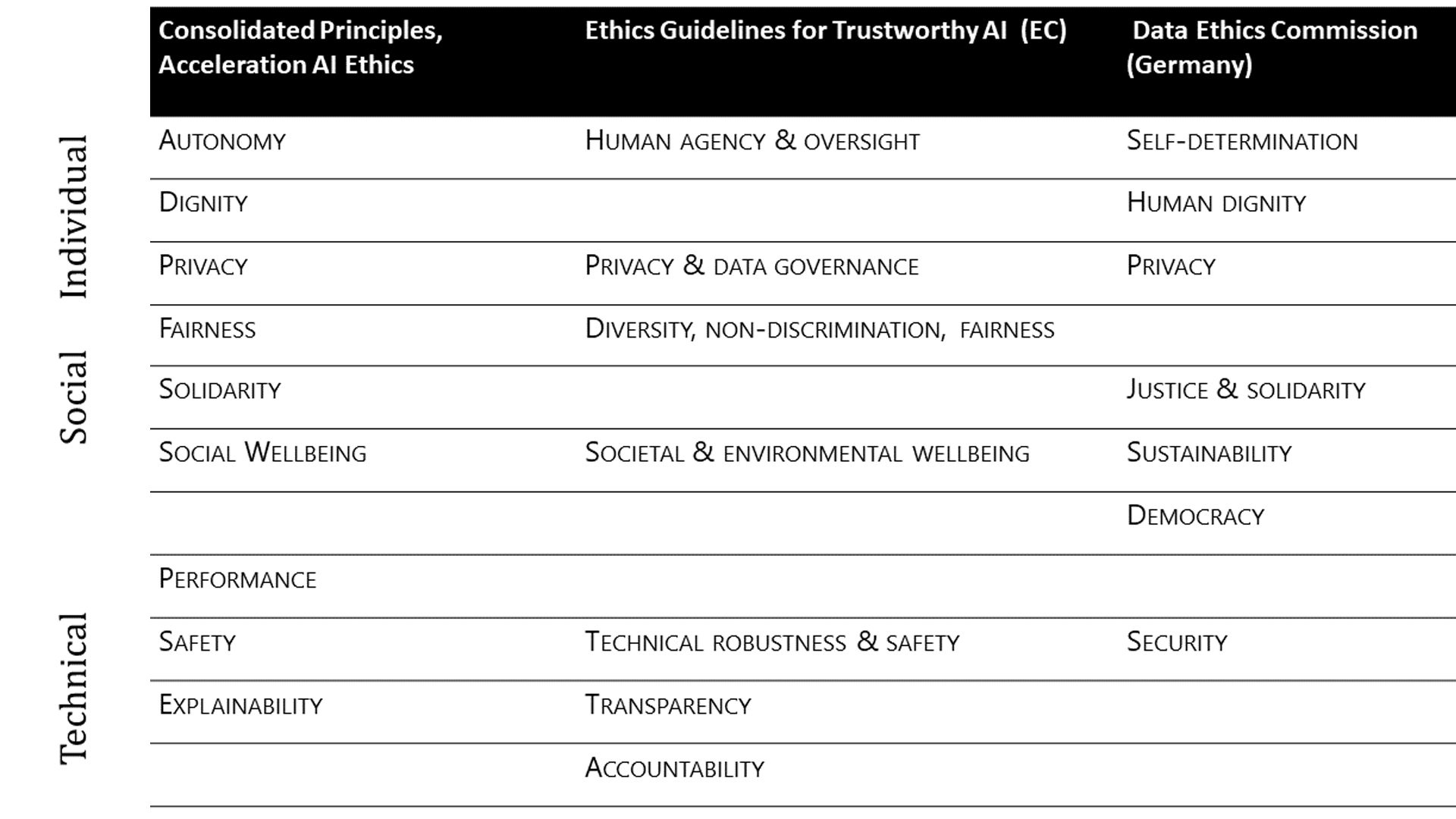

Ethics principles employed in today's Computer Science, Engineering, and AI ethics.

- Individual principles: Autonomy, Human Dignity, Privacy

- Social principles: Fairness, Solidarity, Sustainability/Social wellbeing

- Technical principles: Performance, Safety, Accountability

Selected contemporary debates in the philosophy and ethics of AI.

- Privacy versus social wellbeing, User autonomy, freedom and authenticity, Statistical fairness, Causal AI, Explainable AI, Data ownership, Digital twins, and similar.

Teaching method

The course combines classroom discussion of case studies with lectures by the professor. There are no required texts and no homework, but attendance at seminar sessions is required because the course's main ideas will be developed collaboratively through the seminar discussions. AI ethics is learned by doing AI ethics.

Schedule

Wednesday, May 7, 4.30 p.m. - 6.30 p.m.

Autonomy (Course Introduction)

Thursday, May 8, 4.30 p.m. - 6.30 p.m.

Dignity

Friday, May 9, 4.30 p.m. - 6.30 p.m.

Privacy

Wednesday, May 14, 4.30 p.m. - 6.30 p.m.

Fairness

Thursday, May 15, 4.30 p.m. - 6.30 p.m.

Equity/Solidarity

Friday, May 16, 4.30 p.m. - 6.30 p.m.

Social Wellbeing

Wednesday, May 21, 4.30 p.m. - 6.30 p.m.

Explainability + Safety (video of session available)

Thursday, May 22, 4.30 p.m. - 6.30 p.m.

Performance + Cases: Images, Fake Drake + AI Audits (video of session available)

Wednesday, May 28, 4.30 p.m. - 6.30 p.m.

Student Presentations

Thursday, May 29, 4.30 p.m. - 6.30 p.m.

Student Presentations

Assessment

Students will give a PowerPoint or poster presentation: an AI ethics evaluation of an AI application. The AI application may be a tool the student is developing in their own work, or it may be a publicly known artificial intelligence application (ChatGPT, for example, or smart glasses, or Tesla's Autopilot). The presentation will last 15 to 20 minutes, plus 5 to 10 minutes of questions.

Students will be graded on their ability to locate the ethical dilemmas that arise around AI technology, and their ability to discuss the dilemmas knowledgeably. There are no right or wrong answers in ethics, but there are better and worse understandings of the human values that guide and justify decisions.

Because the main ideas will be developed through classroom discussion, attending at least 80% of seminar sessions is required in order to give the final presentation.

Bibliography

The bibliography will be the seminar sessions and decks.

AI Ethics

Principles

Autonomy/Freedom

- Giving rules to oneself. (Between the slavery of living by others' rules and the chaos of life without rules.) Kant.

- Freedom maximization / Freedom limited only by freedom. (Do what you want up to the point where it interferes with others doing what they want.) Locke.

- Practical independence: Physically free (not compelled), Mentally informed (not misinformed), Psychologically reasonable (not drugged or altered).

- People can escape their own past decisions. (Dopamine rushes from Instagram Likes don’t trap users in trivial pursuits.)

- People can make decisions. (Amazon displays products to provide choice, not to nudge choosing.)

- People can experiment with new decisions: users have access to opportunities and possibilities (professional, romantic, cultural, intellectual) outside those already established by their personal data.

Dignity

- Humans hold intrinsic value: they are ends in themselves and not only means to other ends. People are not tools that can be simply used. People are not replaceable like commodities. Kant.

- People are treated as individually responsible for, and made responsible for, their choices. (Freedom from patronization, condescension, and pity.) Kant, Nietzsche.

- The AI/human distinction remains clear. (Is the voice human, or a chatbot?)

Privacy

- Control over access to your personally identifying information. Westin.

- People can maintain multiple, independent personal information identities. (Work-life, family-life, romantic-life, can remain unmixed online.)

Fairness

- Equals treated equally and unequals treated proportionately unequally, within the decision domain. Aristotle.

- Process justifies the outcome, as opposed to outcome justifying the process.

- Equality applies to opportunities, not outcomes.

- Equality is about verbs (what you can do), not nouns (who/what you are).

- Society is a collection of individuals, as opposed to individuals being aspects of a larger society.

- Ideal treatment of individuals dictates subsequent society, as opposed to ideal vision of society dictating subsequent treatment of individuals.

- Paradigmatic case (where most everyone wants fairness, not equity): the Olympics.

- Everyone can advance, as opposed to no one left behind.

Equity/Solidarity

- Greatest advantage to the least advantaged. (Max/Min distribution.) Rawls.

- Outcome justifies the process, as opposed to process justifying the outcome.

- Equality applies to outcomes, not opportunities.

- Equality is about nouns (who you are), not verbs (what you can do).

- Individuals are aspects of a larger society, as opposed to society being a collection of individuals.

- Ideal vision of society dictates subsequent treatment of individuals, as opposed to the ideal treatment of individuals dictating subsequent society.

- Paradigmatic case (where most everyone wants equity, not fairness): a family.

- No one left behind, as opposed to everyone can advance.

Social wellbeing/Sustainability

- Greatest good to the greatest number. Utilitarianism: Bentham, John Stuart Mill.

- Good understood as pleasure or happiness, in reciprocal relation with pain and suffering.

- Individual subordinated to the collective.
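The utilitarian rule above and the Rawlsian max/min rule under Equity/Solidarity can select different options from the same numbers. The sketch below is only an illustration: the two policies, the wellbeing scores, and the function names are hypothetical, and it assumes that wellbeing can be put on a single comparable scale, which is itself a contested step.

```python
# Hypothetical wellbeing scores for three people under two policies.
# The numbers are invented for illustration only.
policies = {
    "A": [9, 9, 1],
    "B": [5, 5, 4],
}

def utilitarian_choice(options):
    """Pick the option with the largest total wellbeing (greatest good)."""
    return max(options, key=lambda name: sum(options[name]))

def maximin_choice(options):
    """Pick the option whose worst-off person is best off (max/min)."""
    return max(options, key=lambda name: min(options[name]))

print(utilitarian_choice(policies))  # A: total of 19 versus 14
print(maximin_choice(policies))      # B: worst-off person gets 4 versus 1
```

Policy A produces more total good but leaves one person far behind; policy B produces less in total but protects the least advantaged. Which policy counts as better depends on which principle is applied.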

Performance

- Accuracy and efficiency in completing the assigned task.

- End-in-self, not mediated value. (How well task is done, regardless of task's desirability or downstream effects in the world.)

- Engineering evaluated like art, as intrinsic value, not in terms of utility.

- Optimization in terms of sensitivity and selectivity, robustness, scalability.
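As a rough illustration of two of the measures named in the last item, the sketch below computes sensitivity and specificity for a binary classifier. It assumes that "selectivity" refers to what statistics usually calls specificity; the function name and the sample labels are hypothetical.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, 1 = positive.

    Sensitivity: share of real positives that were detected.
    Specificity: share of real negatives that were correctly cleared.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    # Guard against division by zero when a class is absent.
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec

# Invented example: 4 real positives, 6 real negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens)            # 0.75 (3 of 4 positives found)
print(round(spec, 3))  # 0.667 (4 of 6 negatives cleared)
```

Under the Performance principle these numbers are judged on their own terms, independently of whether the classification task is desirable.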

Safety

- Predictable, controllable, and aligned with human values.

- Risks and harms minimized, even when confronted with unexpected changes, anomalies, and perturbations. Robust and resilient.

- Tesla that functions well in San Francisco also does well in Trento, Italy.

- Mental health conversational agent produces no short circuits across the range of patients/vulnerabilities.

Explainability/Accountability

- Explainability: Describe why/how output was produced in the languages of the various stakeholders

- User: interpretability ("What do I need to change about myself to get a different result?")

- Developer: transparency ("What do I need to change in the AI to get a different result?")

- Lawyer: accountability ("Who can I credit/blame for AI performance?")

- The Delphic dilemma: Which is primary, making AI knowledge production better, or knowing why AI knows? What’s worth more, understanding or knowledge? (Knowing, or knowing why you know?)

- If an AI picks stocks, predicts satisfying career choices, or detects cancer, but only if no one can understand how the machine generates knowledge, should it be used?

- Accountability gap: Case where the tool is not explainable and it is impossible to attribute responsibility for an action.

- As a condition of the possibility of producing knowledge exclusively through correlation, AI may not be explainable.

- Accountability gap dilemma: Which is primary, making AI better, or knowing who to blame, and why, when it fails?

- Accountability ambiguity: A driverless car AI system refines its algorithms by imitating driving habits of the human owner (driving distance between cars, accelerating, braking, turning radiuses). The car later crashes. Who is to blame?

- Redress-by-design: The ability to attribute responsibility and compensate harm is engineered in from the beginning.

Compared to other frameworks, our principles lean toward human freedom/libertarianism and are more streamlined. The differences are small.

Ethics Guidelines for Trustworthy AI

AI High Level Expert Group, European Commission

https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai

European Ethical Charter on the Use of Artificial Intelligence in Judicial Systems and their Environment

European Commission for the Efficiency of Justice

https://rm.coe.int/ethical-charter-en-for-publication-4-december-2018/16808f699c

Ethical and Societal Implications of Data and AI

Nuffield Foundation

https://www.nuffieldfoundation.org/sites/default/files/files/Ethical-and-Societal-Implications-of-Data-and-AI-report-Sheffield-Foundat.pdf

The Five Principles Key to Any Ethical Framework for AI

New Statesman, Luciano Floridi and Lord Clement-Jones

https://tech.newstatesman.com/policy/ai-ethics-framework

Postscript on Societies of Control

October 1997, Deleuze

/Library: Deleuze, Foucault, Discipline, Control.pdf

A Declaration of the Independence of Cyberspace

John Perry Barlow

https://www.eff.org/cyberspace-independence